"Revolutionary Framework Achieves Animal-Like Agility in Quadrupedal Robots"

July 13, 2024 feature

by Ingrid Fadelli, Tech Xplore

Four-legged animals are innately capable of agile and adaptable movements, which allow them to move on a wide range of terrains. Over the past decades, roboticists worldwide have been trying to effectively reproduce these movements in quadrupedal (i.e., four-legged) robots.

Computational models trained via reinforcement learning have achieved particularly promising results in enabling agile locomotion in quadrupedal robots. However, these models are typically trained in simulation, and their performance can degrade when they are transferred to physical robots operating in the real world.

Other approaches to agile quadrupedal locomotion use recordings of moving animals, captured with motion sensors and cameras, as demonstrations for training controllers (i.e., algorithms that execute a robot's movements). This approach, known as imitation learning, has enabled some quadrupedal robots to reproduce animal-like movements.
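One common way to turn recorded animal motion into a training signal is a tracking reward that scores how closely the robot follows a reference clip at each timestep. The sketch below is a minimal, hypothetical version of the exponentiated tracking reward popularized by motion-imitation work such as DeepMimic; it is not taken from the Tencent paper. The function name, the 12-joint pose (typical of quadrupeds, assumed here), and all weights and scales are illustrative assumptions.

```python
import numpy as np

def imitation_reward(robot_joint_pos, ref_joint_pos, robot_root_vel, ref_root_vel,
                     w_pose=0.7, w_vel=0.3, k_pose=2.0, k_vel=0.5):
    """Hypothetical per-timestep reward for tracking one frame of an animal reference clip.

    Each term is exp(-k * squared error): a perfect match scores 1.0 overall
    (weights sum to 1), and the reward decays smoothly toward 0 as the robot drifts.
    """
    pose_err = np.sum((np.asarray(robot_joint_pos) - np.asarray(ref_joint_pos)) ** 2)
    vel_err = np.sum((np.asarray(robot_root_vel) - np.asarray(ref_root_vel)) ** 2)
    return w_pose * np.exp(-k_pose * pose_err) + w_vel * np.exp(-k_vel * vel_err)

# Usage with made-up data: 12 joint angles and a 3D root velocity from one
# frame of a (hypothetical) animal clip retargeted to the robot's skeleton.
ref_pose = np.zeros(12)
ref_vel = np.array([1.0, 0.0, 0.0])
perfect = imitation_reward(ref_pose, ref_pose, ref_vel, ref_vel)
drifted = imitation_reward(ref_pose + 0.2, ref_pose, ref_vel * 0.5, ref_vel)
```

A reinforcement learning algorithm would then maximize the sum of this reward over an episode, so the policy learns to reproduce the demonstrated motion rather than follow a handcrafted gait.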

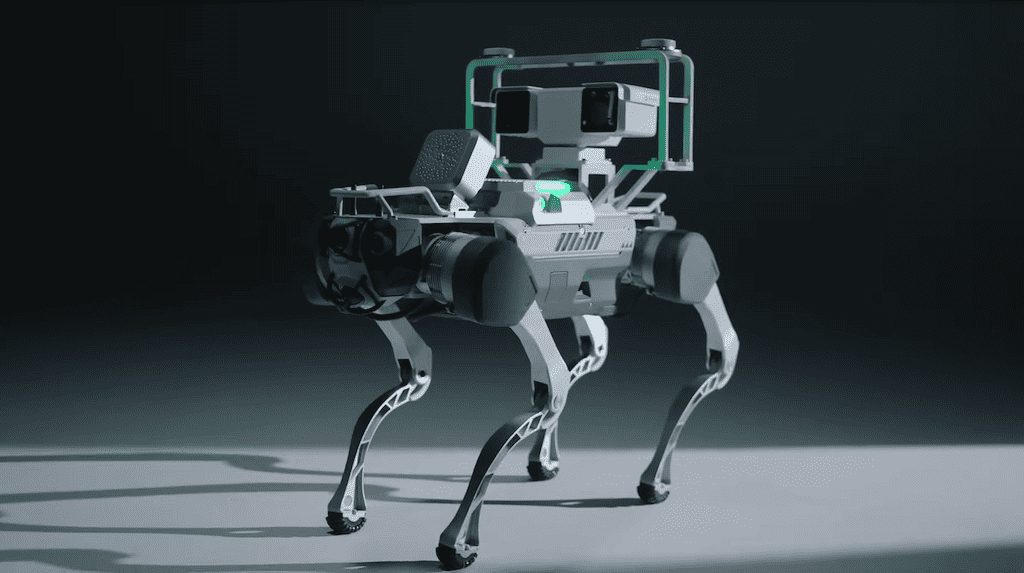

Researchers at Tencent Robotics X in China have introduced a hierarchical framework that could help four-legged robots perform agile, animal-like movements. The framework, presented in a paper published in Nature Machine Intelligence, was first tested on a quadrupedal robot called MAX, with promising results.

'Numerous efforts have been made to achieve agile locomotion in quadrupedal robots through classical controllers or reinforcement learning approaches,' Lei Han, Qingxu Zhu and their colleagues wrote in their paper. 'These methods usually rely on physical models or handcrafted rewards to accurately describe the specific system, rather than on a generalized understanding like animals do. We propose a hierarchical framework to construct primitive-, environmental- and strategic-level knowledge that is all pre-trainable, reusable and enrichable for legged robots.'

The researchers' framework spans three stages of reinforcement learning, each of which extracts knowledge at a different level of locomotion tasks and robot perception. The controllers trained at these three stages are called the primitive motor controller (PMC), the environmental-primitive motor controller (EPMC) and the strategic-environmental-primitive motor controller (SEPMC), respectively.
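The article does not describe the networks inside each controller, so the following is only a sketch of the stacking idea: each new level outputs the input expected by the level beneath it and reuses that level, here assumed to be kept frozen. The class names mirror PMC, EPMC and SEPMC, but the inputs (a latent motion code, terrain features, a 3D velocity command, a game state), all dimensions, and the one-layer `LinearPolicy` stand-in for a neural network are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

class LinearPolicy:
    """Stand-in for a neural-network policy: y = tanh(W x)."""
    def __init__(self, in_dim, out_dim):
        self.W = rng.normal(scale=0.1, size=(out_dim, in_dim))
        self.frozen = False  # a training loop would skip frozen modules

    def __call__(self, x):
        return np.tanh(self.W @ x)

class PMC:
    """Level 1 (hypothetical): latent motion code + proprioception -> joint targets."""
    def __init__(self, latent_dim=8, proprio_dim=24, n_joints=12):
        self.net = LinearPolicy(latent_dim + proprio_dim, n_joints)
        self.latent_dim = latent_dim

    def act(self, z, proprio):
        return self.net(np.concatenate([z, proprio]))

class EPMC:
    """Level 2 (hypothetical): terrain features + command -> latent code for a reused PMC."""
    def __init__(self, pmc, env_dim=16, cmd_dim=3):
        self.pmc = pmc
        self.pmc.net.frozen = True  # reuse level-1 knowledge without retraining it
        self.net = LinearPolicy(env_dim + cmd_dim, pmc.latent_dim)
        self.cmd_dim = cmd_dim

    def act(self, env_obs, cmd, proprio):
        z = self.net(np.concatenate([env_obs, cmd]))
        return self.pmc.act(z, proprio)

class SEPMC:
    """Level 3 (hypothetical): game state -> command for a reused EPMC."""
    def __init__(self, epmc, task_dim=6):
        self.epmc = epmc
        self.epmc.net.frozen = True  # reuse level-2 knowledge without retraining it
        self.net = LinearPolicy(task_dim, epmc.cmd_dim)

    def act(self, task_obs, env_obs, proprio):
        cmd = self.net(task_obs)
        return self.epmc.act(env_obs, cmd, proprio)

# Usage: build the levels in training order, then query the top one.
pmc = PMC()
epmc = EPMC(pmc)     # stage 2 would train only epmc.net
sepmc = SEPMC(epmc)  # stage 3 would train only sepmc.net
joint_targets = sepmc.act(task_obs=rng.normal(size=6),
                          env_obs=rng.normal(size=16),
                          proprio=rng.normal(size=24))
print(joint_targets.shape)  # (12,)
```

Because each stage only learns a mapping into the level below, a new high-level task could in principle be added by training one more small policy on top, which is one reading of the "reusable and enrichable" knowledge the authors describe.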

'The primitive module summarizes knowledge from animal motion data, where, inspired by large pre-trained models in language and image understanding, we introduce deep generative models to produce motor control signals stimulating legged robots to act like real animals,' the researchers wrote. 'We then shape various traversing capabilities at a higher level to align with the environment by reusing the primitive module. Finally, a strategic module is trained, focusing on complex downstream tasks by reusing the knowledge from previous levels.'
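The article does not say which deep generative model the primitive module uses. As a hedged illustration, the sketch below uses a variational autoencoder (VAE), one widely used deep generative model: an encoder compresses a frame of animal motion into a latent code, a decoder maps latent codes back to joint targets, and a KL penalty keeps the latent space close to a standard normal prior so that sampling it later yields plausible motion. All sizes, names and the random stand-in weights are assumptions; a real system would train the weights on animal motion data and would likely model sequences rather than single frames.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM, MOTION_DIM = 8, 12  # hypothetical sizes: latent code, joint targets per frame

# Randomly initialised weights stand in for a trained encoder/decoder.
W_mu = rng.normal(scale=0.1, size=(LATENT_DIM, MOTION_DIM))
W_logvar = rng.normal(scale=0.1, size=(LATENT_DIM, MOTION_DIM))
W_dec = rng.normal(scale=0.1, size=(MOTION_DIM, LATENT_DIM))

def encode(frame):
    """Map a motion frame to the mean and log-variance of q(z | frame)."""
    return W_mu @ frame, W_logvar @ frame

def reparameterize(mu, logvar):
    """Sample z = mu + sigma * eps so gradients can flow through mu and logvar."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps

def decode(z):
    """Turn a latent code into joint-position targets in [-1, 1]."""
    return np.tanh(W_dec @ z)

def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return 0.5 * np.sum(np.exp(logvar) + mu**2 - 1.0 - logvar)

def vae_loss(frame):
    """Reconstruction error plus KL regulariser for one motion frame."""
    mu, logvar = encode(frame)
    recon = decode(reparameterize(mu, logvar))
    return np.sum((recon - frame) ** 2) + kl_to_standard_normal(mu, logvar)

# After training, new motor signals come from sampling the prior; a higher-level
# controller could instead choose z directly to steer the motion.
z = rng.standard_normal(LATENT_DIM)
joint_targets = decode(z)
```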

The researchers evaluated their framework in a series of experiments on the MAX robot. In one of these, two MAX robots competed against each other in a tag-like chase game, with the framework controlling their movements.

'We apply the trained hierarchical controllers to the MAX robot, a quadrupedal robot developed in-house, to mimic animals, traverse complex obstacles and play in a designed, challenging multi-agent chase tag game, where lifelike agility and strategy emerge in the robots,' the team wrote.

In initial tests, the researchers found that their framework allowed MAX to traverse different environments with agile, animal-like movements. In the future, it could be adapted to other four-legged robots, potentially facilitating their deployment in real-world settings.

More information: Lei Han et al, Lifelike agility and play in quadrupedal robots using reinforcement learning and generative pre-trained models, Nature Machine Intelligence (2024). DOI: 10.1038/s42256-024-00861-3

© 2024 Science X Network